Can efficiency and privacy in smart devices be improved? Dr. Syed Ali Hassan at NUST utilized the power of Federated Learning and Over-the-Air techniques to enhance edge communication for 6G networks.

Edge Communication: Machine learning (ML) is remarkably transforming our world, enabling machines to learn from data and make intelligent decisions. This technology is not only revolutionizing industries like healthcare and finance but also playing a crucial role in enhancing wireless and edge communications. As our reliance on connected devices grows, the need for efficient, secure, and responsive communication systems becomes more pressing. This is where federated learning (FL) and edge computing come into play.

Edge communication involves processing data closer to where it is generated, such as on local devices or edge servers, rather than relying solely on centralized cloud servers. This approach reduces latency, improves response times, and enhances data privacy. However, it also presents unique challenges, particularly when it comes to managing limited computational resources and ensuring secure data handling. FL addresses these challenges by enabling multiple devices to collaboratively train a shared ML model without sharing their raw data. Instead, each device processes its data locally and sends only the model updates to a central server, which aggregates them to improve the global model.
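
The train-locally, aggregate-centrally loop described above can be sketched in a few lines of plain Python. This is a minimal illustration only, assuming a toy linear model and a size-weighted average at the server; the names `local_update`, `federated_average`, and `run_fedavg` are hypothetical and are not taken from our publications.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair held privately by one device


def local_update(weights: List[float], data: List[Sample], lr: float = 0.1) -> List[float]:
    """One pass of SGD for the toy model y = w0 + w1 * x on a device's own data."""
    w0, w1 = weights
    for x, y in data:
        err = (w0 + w1 * x) - y
        w0 -= lr * err
        w1 -= lr * err * x
    return [w0, w1]


def federated_average(updates: List[List[float]], sizes: List[int]) -> List[float]:
    """Server side: average the device models, weighted by local dataset size."""
    total = sum(sizes)
    dim = len(updates[0])
    return [sum(u[i] * n for u, n in zip(updates, sizes)) / total for i in range(dim)]


def run_fedavg(device_data: List[List[Sample]], rounds: int = 100) -> List[float]:
    """Repeat: broadcast the global model, train locally, aggregate the results."""
    global_w = [0.0, 0.0]
    for _ in range(rounds):
        # Only the updated weights leave each device, never the raw samples.
        updates = [local_update(global_w, data) for data in device_data]
        sizes = [len(data) for data in device_data]
        global_w = federated_average(updates, sizes)
    return global_w


if __name__ == "__main__":
    # Three devices, each holding a few noiseless samples of y = 1 + 2x.
    devices = [
        [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)],
        [(0.2, 1.4), (0.8, 2.6)],
        [(0.1, 1.2), (0.4, 1.8), (0.6, 2.2), (0.9, 2.8)],
    ]
    print(run_fedavg(devices, rounds=500))
```

Each call to `run_fedavg` performs one communication round per loop iteration, which is the quantity counted when convergence speed is compared across configurations.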

Devices or Channels? Our research, conducted at the Information Processing and Transmission Lab at the School of Electrical Engineering and Computer Science (SEECS) in collaboration with the University of Calgary, Alberta, Canada, examines the dynamics of FL networks across several joint studies. We began by focusing on the interplay between the number of edge devices and the configuration of a convolutional neural network (CNN), particularly the number of channels in a shallow CNN architecture [1]. This investigation is motivated by the need to understand how different combinations of edge devices and CNN configurations, with wireless channel noise acting on the weight vectors, affect the accuracy and convergence of the global model in FL scenarios. Specifically, we explore the number of communication rounds (CRs) needed for the global model to converge under different numbers of convolutional-layer channels and participating edge devices. The insights gained from this analysis help optimize FL networks by identifying configurations that balance model accuracy with communication efficiency.

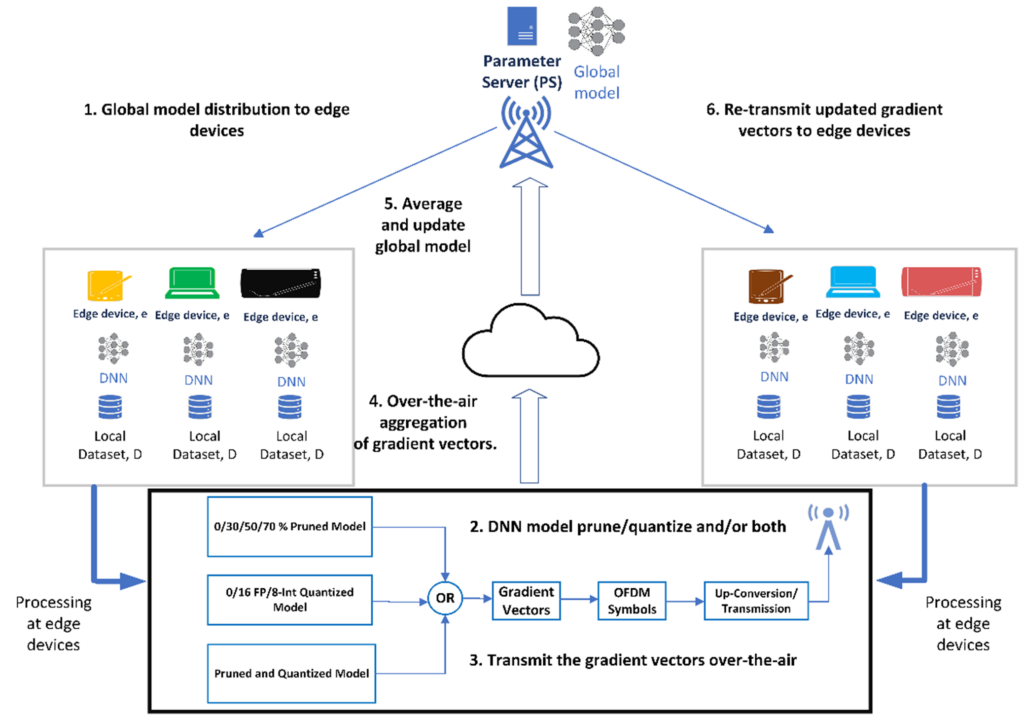

FL and Tactical Networks: In modern communications, over-the-air (OTA) aggregation is attractive because it allows devices to transmit their model updates concurrently, without dedicated wireless resources for each device, thus enhancing communication efficiency. However, larger deep neural network (DNN) architectures pose challenges due to their significant computational burden. Compression techniques such as pruning and quantization-aware training can reduce this burden, making the models more feasible to deploy on edge devices with limited resources. One of the exciting applications of FL, as shown in Figure 1, is within tactical networks, where computationally efficient OTA aggregation is used to train a global model at a parameter server (PS) [2]. In such setups, memory-limited edge devices need to store DNN models that are both compact and highly accurate. Our research further explores how pruning, which reduces model size by removing unnecessary parameters, affects the accuracy of these models when aggregated OTA. We also explore the impact of Rayleigh fading and additive white Gaussian noise (AWGN) on the model’s accuracy at different signal-to-noise ratios (SNRs). Simulation results indicate that at high SNRs, pruned models maintain accuracy comparable to uncompressed models, with significant reductions in size, making them ideal for resource-constrained edge devices.
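
To make the effect of fading and noise on OTA aggregation concrete, the following sketch simulates one aggregation step. It is a simplified illustration, not the simulation setup of [2]. It assumes real-valued signals, unit-power Rayleigh gains, a PS that knows the gains and normalizes by their sum, and an SNR defined relative to the average transmitted power per weight. The function names `rayleigh_gain` and `ota_aggregate` are hypothetical.

```python
import math
import random
from typing import List


def rayleigh_gain(rng: random.Random) -> float:
    """Magnitude of a unit-power complex Gaussian channel coefficient."""
    real = rng.gauss(0.0, math.sqrt(0.5))
    imag = rng.gauss(0.0, math.sqrt(0.5))
    return math.hypot(real, imag)


def ota_aggregate(device_weights: List[List[float]], snr_db: float,
                  rng: random.Random, fading: bool = True) -> List[float]:
    """Superimpose all device vectors on the channel, add AWGN, then normalize."""
    k = len(device_weights)
    dim = len(device_weights[0])
    gains = [rayleigh_gain(rng) if fading else 1.0 for _ in range(k)]

    # Assumption: SNR is measured against the mean power of a transmitted weight.
    power = sum(w * w for vec in device_weights for w in vec) / (k * dim)
    noise_std = math.sqrt(power / (10 ** (snr_db / 10)))

    received = []
    for i in range(dim):
        # All devices transmit at once, so the channel itself does the summation.
        superposed = sum(g * vec[i] for g, vec in zip(gains, device_weights))
        received.append(superposed + rng.gauss(0.0, noise_std))

    # Assumption: the PS knows the gains and divides by their sum.
    norm = sum(gains)
    return [r / norm for r in received]


if __name__ == "__main__":
    rng = random.Random(0)
    weights = [[1.0, 2.0], [3.0, 4.0]]
    for snr in (0.0, 20.0, 40.0):
        print(snr, ota_aggregate(weights, snr, rng))
```

Without fading and at high SNR the output approaches the plain average of the device vectors. With fading, the result is a gain-weighted average, which is one way channel impairments can bias the global model.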

Deep Compression for OTA-FL: We further investigated compression techniques in an OTA-FL setup on two standard DNN models, ResNet50 and InceptionV3, for which we proposed a compression pipeline combining pruning and quantization-aware training [3]. This approach significantly reduces both computation and communication requirements while maintaining model accuracy. Our experiments with these models on two datasets, MNIST and CIFAR-10, under varying SNRs and client numbers, showed that deep compression could reduce model size by up to 80 percent with negligible accuracy loss.
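
The quantization half of such a pipeline can be illustrated with a small sketch. It assumes symmetric uniform quantization and the commonly used straight-through estimator, in which the loss gradient is evaluated at the quantized weights but applied to a full-precision copy. The helpers `fake_quantize` and `qat_step` are hypothetical names, and this is not the exact procedure of [3].

```python
from typing import Callable, List


def fake_quantize(weights: List[float], bits: int = 8) -> List[float]:
    """Snap each weight to a symmetric uniform grid, returning floats again."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    if max_abs == 0.0:
        return list(weights)
    levels = 2 ** (bits - 1) - 1          # 127 grid steps per sign for 8 bits
    scale = max_abs / levels
    return [round(w / scale) * scale for w in weights]


def qat_step(latent: List[float],
             grad_fn: Callable[[List[float]], List[float]],
             lr: float = 0.1, bits: int = 8) -> List[float]:
    """One quantization-aware step using a straight-through estimator.

    The gradient is computed at the quantized weights, so training sees the
    rounding error, but the update is applied to the full-precision copy.
    """
    quantized = fake_quantize(latent, bits)
    grad = grad_fn(quantized)
    return [w - lr * g for w, g in zip(latent, grad)]


if __name__ == "__main__":
    weights = [0.31, -0.77, 0.05, 0.5, -0.12]
    print(fake_quantize(weights, bits=4))
    # Toy loss 0.5 * sum(w^2), whose gradient is the weight vector itself.
    print(qat_step(weights, lambda q: list(q), lr=0.1, bits=4))
```

With 8 bits, each weight takes one of at most 255 grid values, so it could be stored as a one-byte code plus a single shared scale.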

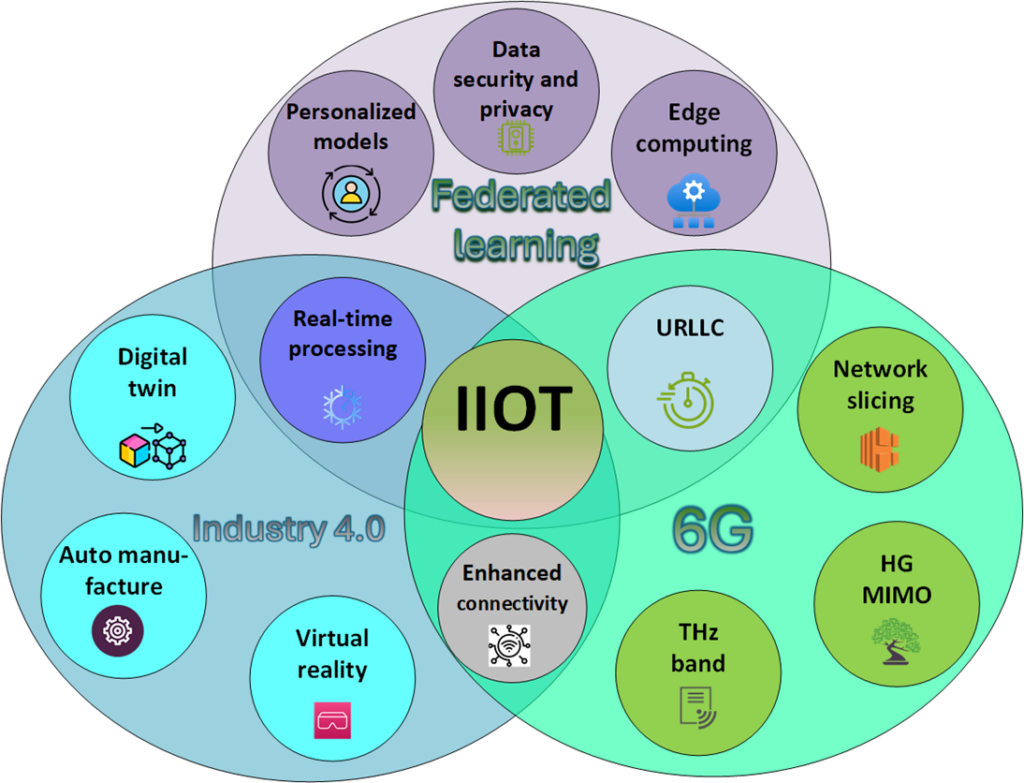

FL in IIoT: FL and the industrial Internet of Things (IIoT), as shown in Figure 2, are pivotal in driving the future of Industry 4.0 and 6G networks, integrating real-time processing, digital twins, and virtual reality into manufacturing and communication systems. FL’s ability to provide personalized models and ensure data privacy while leveraging edge computing aligns well with the demands of IIoT. Moreover, the incorporation of ultra-reliable low-latency communication, network slicing, and enhanced connectivity within 6G frameworks highlights the critical role these technologies play in achieving efficient and secure industrial operations. FL enhances IIoT by enabling edge sensors or peripheral intelligence units (PIUs) to process data locally, ensuring data privacy and security. However, PIUs face constraints such as limited memory and computational power, bandwidth restrictions, and environmental noise. To optimize DNN models for PIUs by further reducing their size while keeping accuracy intact, we employ iterative magnitude pruning, a more refined compression technique, within an OTA-FL network [4]. This improves DNN efficiency by removing unnecessary connections iteratively, ensuring that the models remain compact and effective for deployment in the resource-constrained environments of IIoT.
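
Iterative magnitude pruning can be sketched as a loop that raises the sparsity in small steps and retrains between steps. The version below is a minimal illustration on a flat weight list, assuming a linear sparsity schedule and a caller-supplied `retrain` hook that stands in for real training. The names `extend_mask` and `iterative_magnitude_pruning` are hypothetical.

```python
from typing import Callable, List, Tuple


def extend_mask(weights: List[float], mask: List[int], sparsity: float) -> List[int]:
    """Zero the mask for the smallest-magnitude weights up to the given sparsity."""
    n_prune = int(round(sparsity * len(weights)))
    # Already-pruned entries (mask 0) sort first, so pruning is never undone.
    order = sorted(range(len(weights)), key=lambda i: (mask[i], abs(weights[i])))
    new_mask = list(mask)
    for i in order[:n_prune]:
        new_mask[i] = 0
    return new_mask


def iterative_magnitude_pruning(
    weights: List[float],
    target_sparsity: float,
    steps: int,
    retrain: Callable[[List[float], List[int]], List[float]],
) -> Tuple[List[float], List[int]]:
    """Alternate pruning and retraining until the target sparsity is reached."""
    weights = list(weights)
    mask = [1] * len(weights)
    for step in range(1, steps + 1):
        sparsity = target_sparsity * step / steps   # assumed linear schedule
        mask = extend_mask(weights, mask, sparsity)
        weights = [w * m for w, m in zip(weights, mask)]
        # Placeholder for real training; survivors may change, pruned stay zero.
        weights = retrain(weights, mask)
        weights = [w * m for w, m in zip(weights, mask)]
    return weights, mask


if __name__ == "__main__":
    w = [0.1, -0.9, 0.05, 0.7, -0.3, 0.2, -0.6, 0.01]
    pruned, mask = iterative_magnitude_pruning(w, 0.5, steps=2,
                                               retrain=lambda ws, m: ws)
    print(pruned, mask)
```

Pruning gradually, rather than in one shot, gives the surviving weights a chance to adapt after each removal, which is the usual motivation for the iterative variant.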

Performance Enhancement in FL for Non-IID Data: FL tasks are often challenged by non-independent and identically distributed (non-IID) data across edge devices, meaning that the data generated by each device can differ significantly in distribution. This mismatch leads to discrepancies between model updates, and the standard federated averaging algorithm often converges more slowly and yields a weaker global model as a result. Our research therefore also covers OTA-FL for edge devices with non-IID datasets. We investigated how pruning and channel impairments affect OTA-FL in a non-IID setup and found significant degradation for pruning rates over 50 percent [5]. To mitigate this, we explored two approaches: utilizing FedProx, a federated optimization algorithm designed for non-IID data, and iteratively retraining the model at the PS using a representative dataset. Our simulations showed that these methods could achieve considerable gains, especially when pruning is not too aggressive and for less complex classification tasks.
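
FedProx differs from plain federated averaging mainly on the device side: each local objective gains a proximal term, (mu / 2) * ||w - w_global||^2, that penalizes drifting away from the current global model. The sketch below shows the resulting local update under simple assumptions (plain gradient descent, a caller-supplied gradient function). The names `fedprox_local_update` and `grad_fn` are hypothetical, and the values of `lr` and `mu` are illustrative only.

```python
from typing import Callable, List


def fedprox_local_update(
    w_global: List[float],
    grad_fn: Callable[[List[float]], List[float]],
    steps: int = 10,
    lr: float = 0.1,
    mu: float = 0.1,
) -> List[float]:
    """Local training with the FedProx proximal term.

    Each step descends the gradient of
        local_loss(w) + (mu / 2) * ||w - w_global||^2,
    so mu = 0 recovers ordinary local gradient descent.
    """
    w = list(w_global)
    for _ in range(steps):
        grad = grad_fn(w)
        w = [wi - lr * (gi + mu * (wi - wgi))
             for wi, gi, wgi in zip(w, grad, w_global)]
    return w


if __name__ == "__main__":
    # Toy device whose local optimum (w = 10) is far from the global model (w = 0).
    def local_grad(w: List[float]) -> List[float]:
        return [w[0] - 10.0]

    print(fedprox_local_update([0.0], local_grad, steps=200, mu=0.0))
    print(fedprox_local_update([0.0], local_grad, steps=200, mu=1.0))
```

In the toy example, a larger `mu` keeps the device's model closer to the global one, which limits how far devices with skewed data can pull their updates apart.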

In summary, our work in collaboration with the University of Calgary, Canada demonstrates the potential of FL, OTA aggregation, and model compression techniques to revolutionize edge communication and computing. By addressing the challenges of limited resources and ensuring efficient, secure data processing, these advancements pave the way for more robust and responsive communication systems. As we continue to refine these technologies, the future of wireless and edge communication looks promising, with enhanced performance and greater adaptability to diverse real-world scenarios.

References:

1. F. M. A. Khan, S. A. Hassan, R. I. Ansari, and H. Jung, “Analyzing Convergence Aspects of Federated Learning: More Devices or More Network Layers?” 2022 IEEE 95th Vehicular Technology Conference (VTC2022-Spring), Helsinki, Finland, 2022, pp. 1-5, doi: 10.1109/VTC2022-Spring54318.2022.9860649.

2. F. M. A. Khan, H. Abou-Zeid, and S. A. Hassan, “Model Pruning for Efficient Over-the-Air Federated Learning in Tactical Networks,” 2023 IEEE International Conference on Communications (ICC), Rome, Italy, 2023, pp. 1806-1811, doi: 10.1109/ICCWorkshops57953.2023.10283773.

3. F. M. A. Khan, H. Abou-Zeid, and S. A. Hassan, “Deep Compression for Efficient and Accelerated Over-the-Air Federated Learning,” IEEE Internet of Things Journal (IF=10.6), doi: 10.1109/JIOT.2024.3373460.

4. F. M. A. Khan, H. Abou-Zeid, A. Kaushik, and S. A. Hassan, “Advancing IIoT with Over-the-Air Federated Learning: The Role of Iterative Magnitude Pruning,” accepted for publication in Q3 2024 in IEEE Internet of Things Magazine.

5. F. M. A. Khan, H. Abou-Zeid, and S. A. Hassan, “Enhancing the Performance of Model Pruning in Over-the-Air Federated Learning with Non-IID Data,” in Proceedings of the IEEE International Conference on Communications (ICC), Denver, USA, 2024.

The author is a PhD student at the School of Electrical Engineering and Computer Science (SEECS), National University of Sciences and Technology (NUST), supervised by Prof. Dr. Syed Ali Hassan.

Research Profile: https://bit.ly/4fbgXBr
