According to the World Health Organization (WHO), around 15% of the world's population has some form of disability [1], and a later report from 2016 estimated that about 1 billion people need assistive products. Furthermore, a May 2022 report by the International Council of Nurses put the shortage of nursing staff at about 13 million. In Pakistan, there are approximately 31 million people with disabilities. Despite medical advances, each patient still requires significant nursing time, and the kind of support needed depends on the patient's condition. Among these people, the disabled, the elderly, and patients with contagious diseases in particular face difficulty with activities of daily living, one of the most important of which is eating meals. Such people require considerable support to carry out these activities and to live a good life.

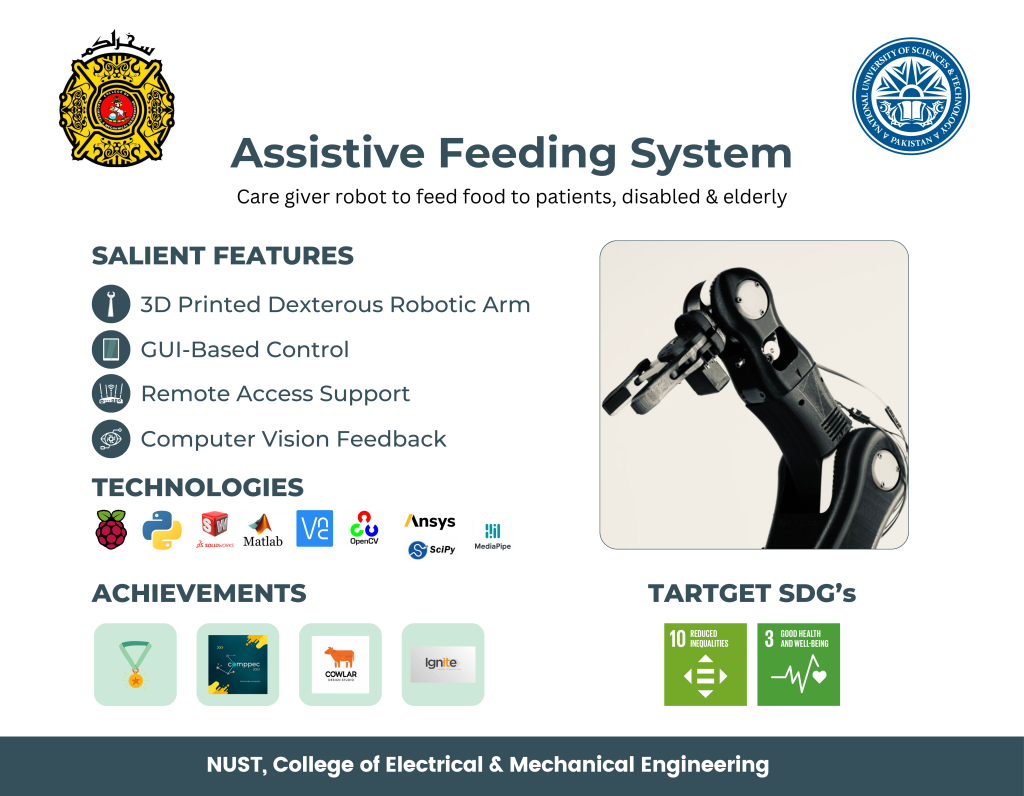

To address this problem and to contribute to the Sustainable Development Goals, a research and development project titled "Assistive Feeding System", funded by the IGNITE Technology Fund, was carried out with the following objectives:

- To manufacture a 3D-printed serial robotic manipulator with a custom end-effector that holds utensils for assistive feeding.

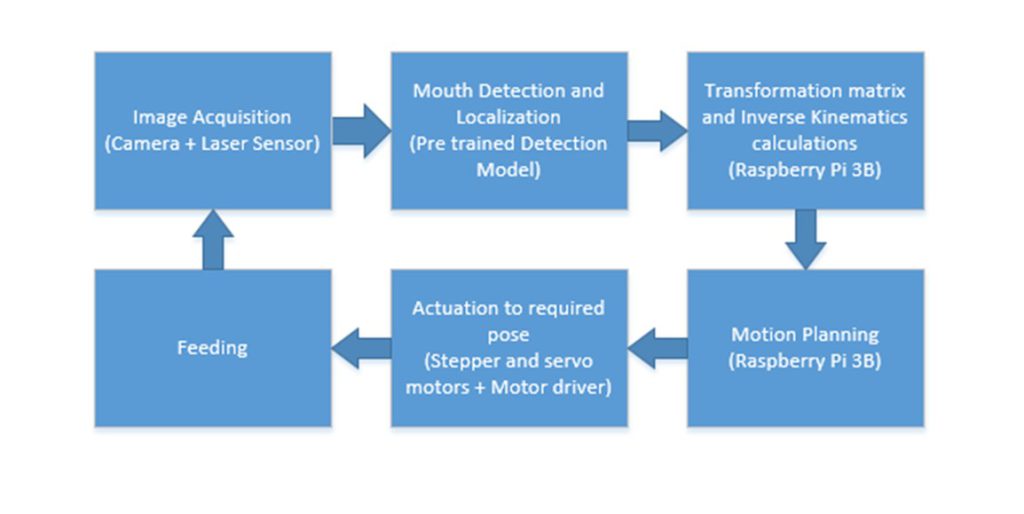

- To develop firmware for the robot's control system that combines computer vision-based feedback with inverse kinematics-based control to feed the user.

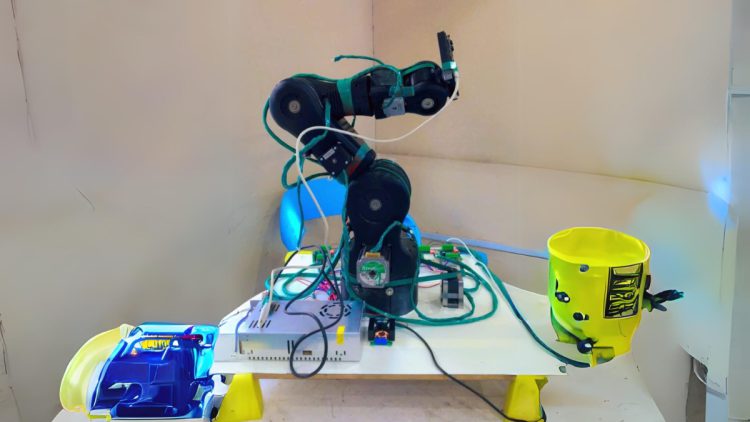
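The second objective relies on inverse kinematics-based control, but the write-up does not describe the arm's 6-DOF solver. As a simplified, hypothetical illustration of the idea, the sketch below solves the closed-form inverse kinematics of a planar two-link arm (for example, a shoulder and an elbow moving in one vertical plane) and checks the answer with forward kinematics. The function names and link lengths are assumptions for illustration, not the project's code.

```python
import math


def two_link_ik(x, z, upper, forearm, elbow_up=True):
    """Hypothetical helper: joint angles (shoulder, elbow) in radians that put the
    tip of a planar two-link arm at (x, z). Raises ValueError if unreachable."""
    r2 = x * x + z * z
    # Law of cosines gives the cosine of the elbow angle.
    cos_elbow = (r2 - upper * upper - forearm * forearm) / (2 * upper * forearm)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target out of reach")
    elbow = math.acos(cos_elbow)  # in [0, pi]
    if elbow_up:
        # For targets with x > 0, a negative elbow angle lifts the elbow above the
        # shoulder-to-target line.
        elbow = -elbow
    shoulder = math.atan2(z, x) - math.atan2(
        forearm * math.sin(elbow), upper + forearm * math.cos(elbow)
    )
    return shoulder, elbow


def two_link_fk(shoulder, elbow, upper, forearm):
    """Forward kinematics: tip position (x, z) for the given joint angles."""
    x = upper * math.cos(shoulder) + forearm * math.cos(shoulder + elbow)
    z = upper * math.sin(shoulder) + forearm * math.sin(shoulder + elbow)
    return x, z


if __name__ == "__main__":
    # Hypothetical link lengths (m) and a target point in the arm's vertical plane.
    upper_len, forearm_len = 0.22, 0.16
    q1, q2 = two_link_ik(0.25, 0.10, upper_len, forearm_len)
    print(two_link_fk(q1, q2, upper_len, forearm_len))  # approximately (0.25, 0.10)
```

A full 6-DOF arm would usually also solve for the wrist orientation, either analytically or with a numerical solver; this planar case only shows how a target position is mapped to joint angles.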

The Assistive Feeding System is a six-degree-of-freedom (6-DOF), fixed-base, open-source robotic arm with active assistance. The manipulator is 3D printed by fused deposition modelling (FDM) in PLA+, and it introduces a counterweight mechanism that lowers the torque required from the motors and hence the power consumption of the system. The system is divided into two subsystems: a vision system, based on an ETRON eSP870u camera, that locates the target person's mouth; and a delivery system, running on a Raspberry Pi 4B, that uses the manipulator and its control system to feed the food. The final system has two main modes, passive feeding and active feeding, and the user can choose and switch between them at runtime as required.
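To see why a counterweight lowers the motor torque requirement, consider the static gravitational torque about a horizontal joint axis, `tau = g * cos(theta) * sum(m_i * d_i)`, where each mass `m_i` sits at signed distance `d_i` along the link from the joint and `theta` is the link angle above horizontal. A counterweight placed behind the joint contributes a negative `m_i * d_i` term. The masses and distances below are hypothetical, chosen only to illustrate the arithmetic; the project's actual values are not given.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def gravity_torque(theta_rad, masses_and_arms):
    """Static gravitational torque (N*m) about a horizontal joint axis.

    masses_and_arms: list of (mass_kg, signed_distance_m) pairs measured along the
    link from the joint; a counterweight behind the joint has a negative distance.
    theta_rad: link angle above horizontal (torque peaks at 0, vanishes at pi/2).
    """
    moment = sum(m * d for m, d in masses_and_arms)
    return G * moment * math.cos(theta_rad)


if __name__ == "__main__":
    forearm = [(0.40, 0.15)]             # hypothetical link + utensil mass at its centre of mass
    with_cw = forearm + [(0.30, -0.10)]  # hypothetical counterweight behind the joint
    print(round(gravity_torque(0.0, forearm), 4))  # 0.5886
    print(round(gravity_torque(0.0, with_cw), 4))  # 0.2943
```

With these made-up numbers the counterweight halves the holding torque in the horizontal pose. It only offsets the static gravity load, though: the added mass also increases the link's inertia, so the torque needed for acceleration is not reduced in the same way.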

On start-up, the robot calibrates its joint positions and waits for the user to command it to scoop or to feed. To scoop, the robot moves to a fixed position where the food is placed and scoops it up. When the user presses the feed button, the robot uses computer vision to locate the person's mouth and moves toward it using inverse kinematics-based control. Once it is near the person, depth measurement is handed over to a Class 1 laser distance sensor, which measures the distance accurately so that the utensil does not strike the user. Scooping and feeding are deliberately not fully automated, because chewing time varies from case to case. User control is also versatile: the robot can be operated through a GUI or wirelessly from a mobile phone or laptop.
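The hand-over from camera depth to the laser sensor can be sketched as a simple selection rule evaluated every control cycle. This is a minimal sketch under stated assumptions: the threshold values, the step size, and the `DepthReadings`, `fused_depth`, and `approach_step` names are hypothetical, and the actual firmware may combine the two sources differently.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds; the project does not state its values.
HANDOVER_DISTANCE_M = 0.15  # a laser return closer than this takes priority over the camera
STOP_DISTANCE_M = 0.04      # stop advancing once the utensil is this close to the mouth


@dataclass
class DepthReadings:
    camera_m: Optional[float]  # mouth depth from the vision system (None if no mouth found)
    laser_m: Optional[float]   # laser range reading (None if invalid or out of range)


def fused_depth(r: DepthReadings) -> Optional[float]:
    """Use the camera estimate when far; switch to the laser once it sees something close."""
    if r.laser_m is not None and r.laser_m <= HANDOVER_DISTANCE_M:
        return r.laser_m
    return r.camera_m


def approach_step(r: DepthReadings, step_m: float = 0.02) -> float:
    """Distance (m) to advance toward the mouth this cycle; 0.0 means hold position."""
    depth = fused_depth(r)
    if depth is None:
        return 0.0  # mouth lost and nothing close: hold rather than move blindly
    remaining = depth - STOP_DISTANCE_M
    if remaining <= 0.0:
        return 0.0  # close enough: wait for the user
    return min(step_m, remaining)  # never overshoot the stop distance
```

Letting a close laser return override the camera is a conservative choice: even if the vision estimate is off at short range, the utensil stops advancing before it reaches whatever the laser sees.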

In conclusion, the Assistive Feeding System is a proof of concept for both active and passive feeding. Manufacturing the robot by 3D printing and introducing a new mechanism not only reduce the cost of the robot but also remove hurdles to local manufacturing. Using a monocular camera reduces costs further, as no stereo camera is required. Overall, using this methodology, we successfully fed rice to the test user.

Video 1: Demonstration of the Assistive Feeding Robotic Arm

References

1. World Health Organization, World Report on Disability (2011). https://www.who.int/disabilities/world_report/2011/report/en/

Acknowledgement

We would like to thank BCN3D for the base design of the robotic manipulator.

The student team that worked on the project comprised Usama Jahangir (team lead), Muhammad Fahad Aamir, and Wajid Ali. The project was supervised by Dr Mohsin Islam Tiwana and co-supervised by Dr Hamid Jabbar. The supervisor can be reached at [email protected].
